Using a Kano-based survey, you can avoid false positives (“Oh, sure I'd totally use that!” ... never uses it) and identify the features your product actually needs to have to satisfy and delight your customers.

This is part two of a three-part series exploring the Kano Model and how to use it to build software products your customers will love.

If you're unfamiliar with the basics of the Kano Model, start with part one to understand what the Kano Model is and what it can help you measure.

Creating a Kano Model Survey

Now that we understand what the Kano Model is attempting to explain (and assuming we agree), how do we go about determining which features are 😍 delighters, 😐 must-haves, etc.?

Obviously, the answer will come by “getting out of the building” and asking your customers and potential customers what they think.

Don’t ask, “Would you use ___ if we added it?”

In chapter 4 of her book, Lean Customer Development, Cindy Alvarez offers some good advice on focusing on potential customers’ actual current behavior rather than their aspirational future behavior. It’s pretty well established that asking people, “How likely would you be to use ___?” leads to a disproportionate number of false positives and to wasted development cycles building features people don’t adopt.

During qualitative, face-to-face interviews, we can frame questions as, “Tell me about the last time you ___,” to ensure we’re getting at actual behavior and aren’t leading the witness.

With a Kano-based survey, we guard against aspirational “Oh, sure I’d totally use that!” responses by asking both a positive and a negative question about the same feature or requirement.

Instead, measure how they would feel if it existed and if it didn’t

“If you are able to sort your search results alphabetically, how do you feel?”

- I like it!

- I expect it.

- I’m neutral.

- I can live with it.

- I dislike it.

and then:

“If you are not able to sort your search results alphabetically, how do you feel?”

- I like it!

- I expect it.

- I’m neutral.

- I can live with it.

- I dislike it.
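The question pair above follows a fixed pattern, so it can be generated for every feature on your list. Here is a minimal Python sketch, assuming each feature can be phrased as something the user is able to do. The names `kano_question_pair` and `ANSWER_OPTIONS` are illustrative and not part of any survey tool:

```python
# The five answer options offered for both questions, in the order shown above.
ANSWER_OPTIONS = [
    "I like it!",
    "I expect it.",
    "I'm neutral.",
    "I can live with it.",
    "I dislike it.",
]


def kano_question_pair(capability):
    """Return the (positive, negative) questions for one feature.

    `capability` is a hypothetical phrasing such as
    "sort your search results alphabetically".
    """
    positive = f"If you are able to {capability}, how do you feel?"
    negative = f"If you are not able to {capability}, how do you feel?"
    return positive, negative


positive, negative = kano_question_pair("sort your search results alphabetically")
print(positive)
print(negative)
```

Keeping the wording identical apart from the "not" makes it easier to compare a respondent's two answers later.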

How do you interpret Kano Survey responses?

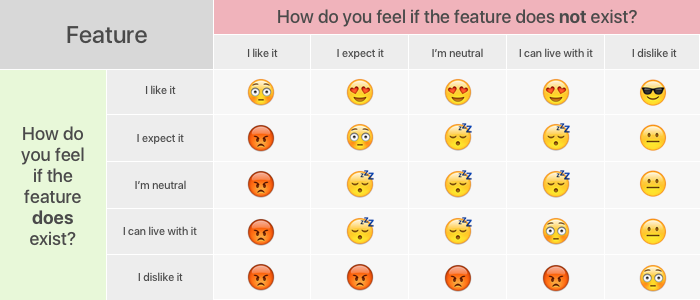

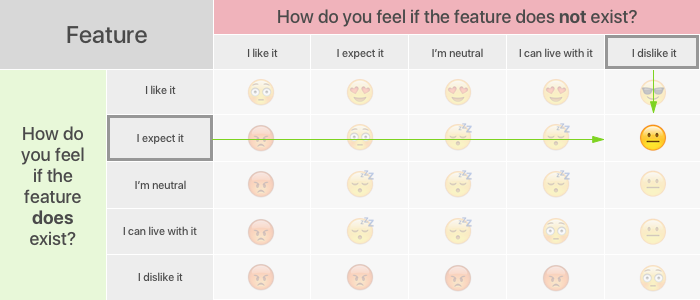

We then use both responses together to look up the feature type for that respondent in the grid.

For example, when a respondent answers “I expect it” to the positive question and “I dislike it” to the negative, we can see that for this particular user, that feature is a 😐 Must-Have.

Quick aside. You’ll notice that four of those spaces have a new category: 😳. This indicates a Questionable response, because a user shouldn’t really like it both when a feature exists and when it doesn’t. We won’t necessarily say they’re lying, but it certainly suggests they weren’t paying close attention to the question.
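If you would rather not look up each answer pair by hand, the grid can be expressed as a small lookup table. The sketch below assumes the commonly published Kano evaluation table, which may not match the grid pictured in this article cell for cell. In particular, this common version marks only two cells as Questionable (like/like and dislike/dislike), so adjust `GRID` to match the grid you actually use. The answer keys and the `classify` function are illustrative names:

```python
# Answer keys, in the same order as the survey options.
ANSWERS = ["like", "expect", "neutral", "live_with", "dislike"]

# ASSUMPTION: the commonly published Kano evaluation table.
# Rows = answer to the positive question, columns = answer to the negative one.
# A = Delighter, O = One-Dimensional, M = Must-Have,
# I = Indifferent, R = Reverse, Q = Questionable
GRID = [
    "QAAAO",  # positive answer: like
    "RIIIM",  # positive answer: expect
    "RIIIM",  # positive answer: neutral
    "RIIIM",  # positive answer: live_with
    "RRRRQ",  # positive answer: dislike
]

CATEGORY_NAMES = {
    "A": "Delighter",
    "O": "One-Dimensional",
    "M": "Must-Have",
    "I": "Indifferent",
    "R": "Reverse",
    "Q": "Questionable",
}


def classify(positive_answer, negative_answer):
    """Map one respondent's answer pair to a Kano category name."""
    row = ANSWERS.index(positive_answer)
    col = ANSWERS.index(negative_answer)
    return CATEGORY_NAMES[GRID[row][col]]


print(classify("expect", "dislike"))  # the Must-Have example from the text
```

An answer that is not in `ANSWERS` raises a `ValueError`, which is a reasonable way to catch typos in exported survey data.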

How to Record Kano Survey Responses

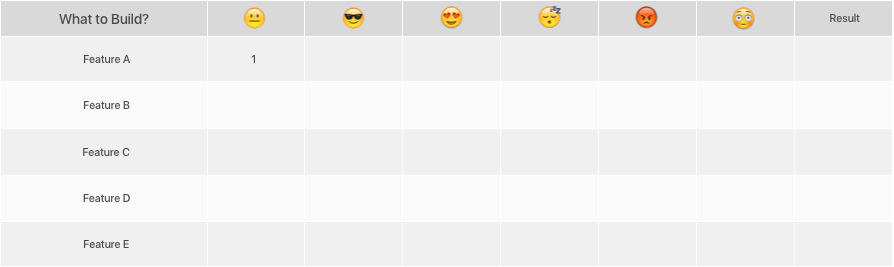

We’ll record that response in our handy-dandy response tracker table – also known as a spreadsheet.

Then, we repeat that two-part question for each of our features with a batch of customers and let them tell us where each feature belongs.

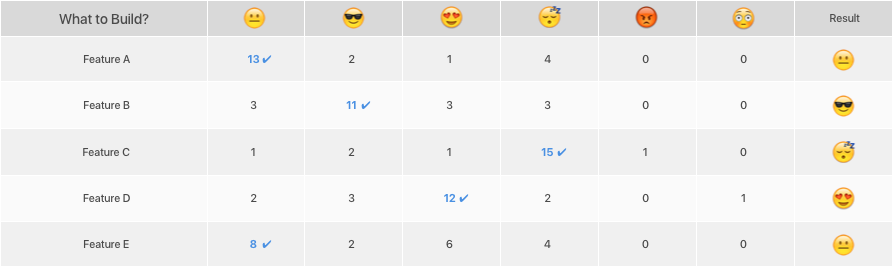

In this hypothetical example, Feature A received:

Must-Haves: 13

One-Dimensionals: 2

Delighters: 1

Indifferent: 4

Reverse: 0

Questionable: 0

So, it’s a Must-Have! Build that thing!
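The same tally can be done outside a spreadsheet. This is a rough sketch using Python's standard `collections.Counter`, with the Feature A numbers from the example above. The `tally` function name is an assumption for illustration:

```python
from collections import Counter


def tally(categories):
    """Count Kano categories for one feature and return (counts, most common)."""
    if not categories:
        raise ValueError("no responses to tally")
    counts = Counter(categories)
    winner, _ = counts.most_common(1)[0]
    return counts, winner


# Feature A from the hypothetical example: one category per respondent.
feature_a = (
    ["Must-Have"] * 13
    + ["One-Dimensional"] * 2
    + ["Delighter"] * 1
    + ["Indifferent"] * 4
)

counts, winner = tally(feature_a)
print(counts["Must-Have"], winner)
```

Note that when two categories tie, `most_common` simply returns whichever it encountered first, so a tie deserves a closer look rather than a blind pick.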

How Many Kano Survey Responses Do I Need?

When it comes to qualitative interviews, it’s easier to go on feel and base it on something like, “When we’re no longer hearing new things that surprise us, we’ve probably got enough actionable info from this batch of interviews.”

But with this quantitative measure, we want to be sure we’re not drawing conclusions from too small a sample size. I haven’t come across a well-documented standard for a minimum number of responses, but several trustworthy practitioners have suggested that 15 to 20 responses usually start to reveal some truth.

And use your judgement, of course. In the example above, we can be pretty certain that Feature A is a Must-Have (13, 2, 1, 4), but Feature E isn’t as clear (8, 2, 6, 4). If you see that kind of “close call” pattern emerging, consider digging in on the use case of that feature in your next few customer interviews, and perhaps you’ll have a better sense of where it may belong.
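One way to make the “close call” judgement a little more systematic is to compare the top two categories. The sketch below flags a feature when the gap between the leader and the runner-up is a small share of all responses. The 20% threshold and the `is_close_call` name are assumptions chosen for illustration, not an established rule, and the Feature E counts are assumed to be listed in the same order as Feature A's:

```python
def is_close_call(counts, min_margin=0.2):
    """Return True when the top category leads by less than `min_margin`.

    `counts` maps category name -> number of responses.
    `min_margin` is a hypothetical threshold, expressed as a share of responses.
    """
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses to evaluate")
    ranked = sorted(counts.values(), reverse=True)
    runner_up = ranked[1] if len(ranked) > 1 else 0
    return (ranked[0] - runner_up) / total < min_margin


feature_a = {"Must-Have": 13, "One-Dimensional": 2, "Delighter": 1, "Indifferent": 4}
feature_e = {"Must-Have": 8, "One-Dimensional": 2, "Delighter": 6, "Indifferent": 4}

print(is_close_call(feature_a))  # lead of 9 out of 20 responses
print(is_close_call(feature_e))  # lead of 2 out of 20 responses
```

Treat a flagged feature as a prompt for more interviews, not as a verdict.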

Get Started!

That’s enough to get you going in the right direction! If you have any questions or comments, don’t hesitate to reach out.

For now, identify your feature set, create a survey, gather responses, and be open to what the data tells you!

Free 15-Minute Consultation

Are you building a product and need some guidance deciding what to build, what to ignore, and what to add later?

Schedule a free 15-minute conversation and I will help you get unstuck so you can start making progress!

- Part One: Understanding the Kano Model

- Part Three: Charting the Results (coming soon)