In this post, I’ll show you step-by-step how I’ve helped my clients know what to build, what to improve, what to leave alone, and what to ignore.

I’ll show you how to use customer interviews to design your Jobs to Be Done survey questions and provide a Jobs to Be Done survey template to make it as easy as possible.

I’ve executed this process for clients as large as LEGO and Minecraft, as well as startups just closing their Series A or still raising seed capital. No matter where you are in your product design journey, the research process I describe below will save you and your team valuable time, energy, and money.

By the end of this article, you'll be able to run this process yourself! Or, if you'd prefer, reach out and I'll do it for you.

Background

A client dreamed up an app but struggled to focus its wide-open potential into a coherent v1. Where do you start when the possibilities seem endless? I led structured customer interviews to identify the core user needs. My insights anchored the team in what matters most: solving their customers’ pain points.

The research illuminated the app’s highest-value opportunity. The team now had their North Star for the first release.

This proven methodology, honed through years of practice, brings clarity to complexity. I distill the fuzzy front end of product development into clear, actionable truths.

I’ll go into the details for each part of the process I used to identify their most pressing customer needs, but for the skimmers, here’s the gist:

- Run some customer interviews to gain a better understanding of the customers’ Job to Be Done.

- Split the Job to Be Done into steps.

- Survey the importance and satisfaction of each step of the Job to Be Done.

- Visualize the survey results with a web app I’ve built specifically for this type of survey.

Measuring What Customers Want

I’m most excited to share the visualization of the survey data, but first, we need to start with the Job to Be Done, its sub-jobs, and a way of understanding the criteria customers will use to judge how well they’re getting the job done.

For example, if a customer’s main job is to “share beautiful pictures,” sub-jobs could be “crop the photo,” “add a caption,” and “add a filter.” But how can we measure which of those sub-jobs are worth investing resources into?

The solution lies in asking a paired importance/satisfaction question for each sub-job, and then focusing on the sub-jobs that customers rate as extremely important but are extremely unsatisfied with.

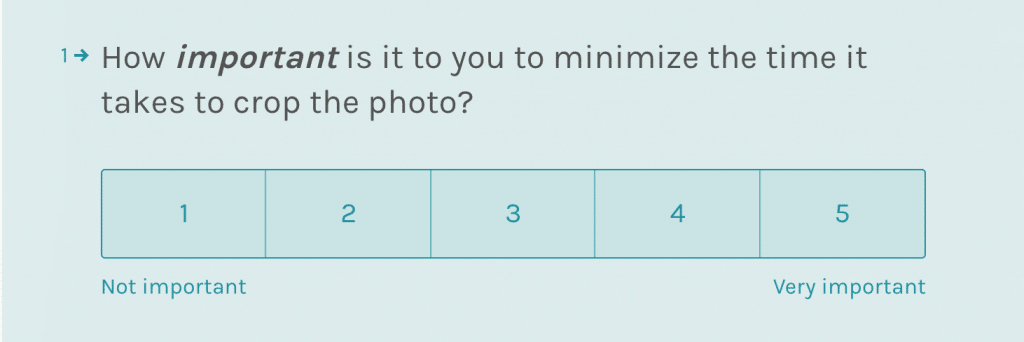

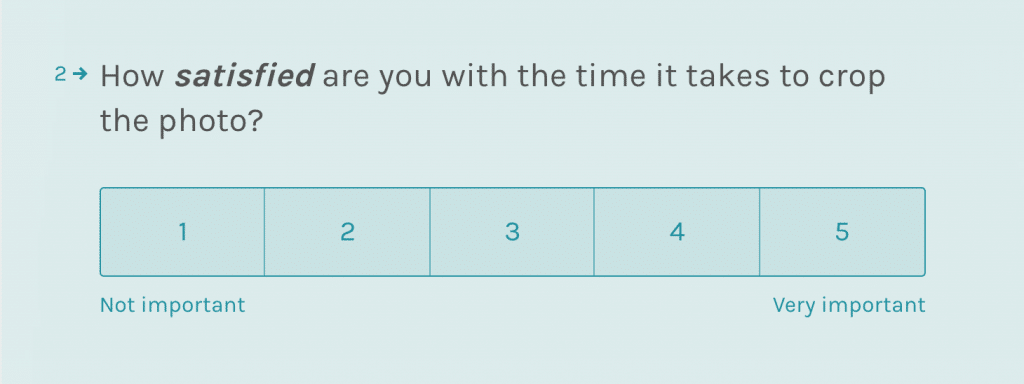

Let these two simple questions guide you in prioritizing your product roadmap:

- How important is this desired outcome to your customers?

- How satisfied are they with current solutions?

The answers reveal where you should focus your efforts for maximum impact.

Unimportant and satisfied? Don’t waste time reinventing the wheel.

Important but satisfied? Include it to delight users.

But pay special attention when a feature is crucial yet unsatisfying. This signals an opportunity to stand out from competitors. Fixing pain points here can win business and loyalty.
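The quadrant logic above can be sketched as a small Python function. This is a minimal sketch, assuming both ratings use the 0-10 scale described later in this post and assuming a cutoff of 5.0 separates “important” from “unimportant” and “satisfied” from “unsatisfied.” The cutoff, the `classify_outcome` name, and the `Quadrant` type are hypothetical illustrations, and since the guidance above doesn't cover the unimportant-and-unsatisfied quadrant, the sketch simply flags it as such.

```python
from typing import NamedTuple

# Assumed cutoff on the 0-10 scale; adjust it to suit your own data.
CUTOFF = 5.0


class Quadrant(NamedTuple):
    name: str
    advice: str


def classify_outcome(importance: float, satisfaction: float, cutoff: float = CUTOFF) -> Quadrant:
    """Place one outcome in a quadrant from its 0-10 importance and satisfaction ratings."""
    important = importance >= cutoff
    satisfied = satisfaction >= cutoff
    if important and not satisfied:
        return Quadrant("important, unsatisfied", "Opportunity: fix this pain point")
    if important and satisfied:
        return Quadrant("important, satisfied", "Include it to delight users")
    if satisfied:
        return Quadrant("unimportant, satisfied", "Don't reinvent the wheel")
    return Quadrant("unimportant, unsatisfied", "Not covered by the guidance above")


print(classify_outcome(8.2, 2.5).name)  # important, unsatisfied
print(classify_outcome(1.5, 6.0).name)  # unimportant, satisfied
```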

Approaching prioritization this way brings clarity to confusion. No more guessing which features might populate an MVP. No more wrestling over backlogs. Just a clear compass to build what matters most to your customers.

The path forward starts with understanding their needs. Let these questions guide you to high-impact opportunities. Then seize them decisively to help your customers accomplish what matters most.

Visualizing the Responses

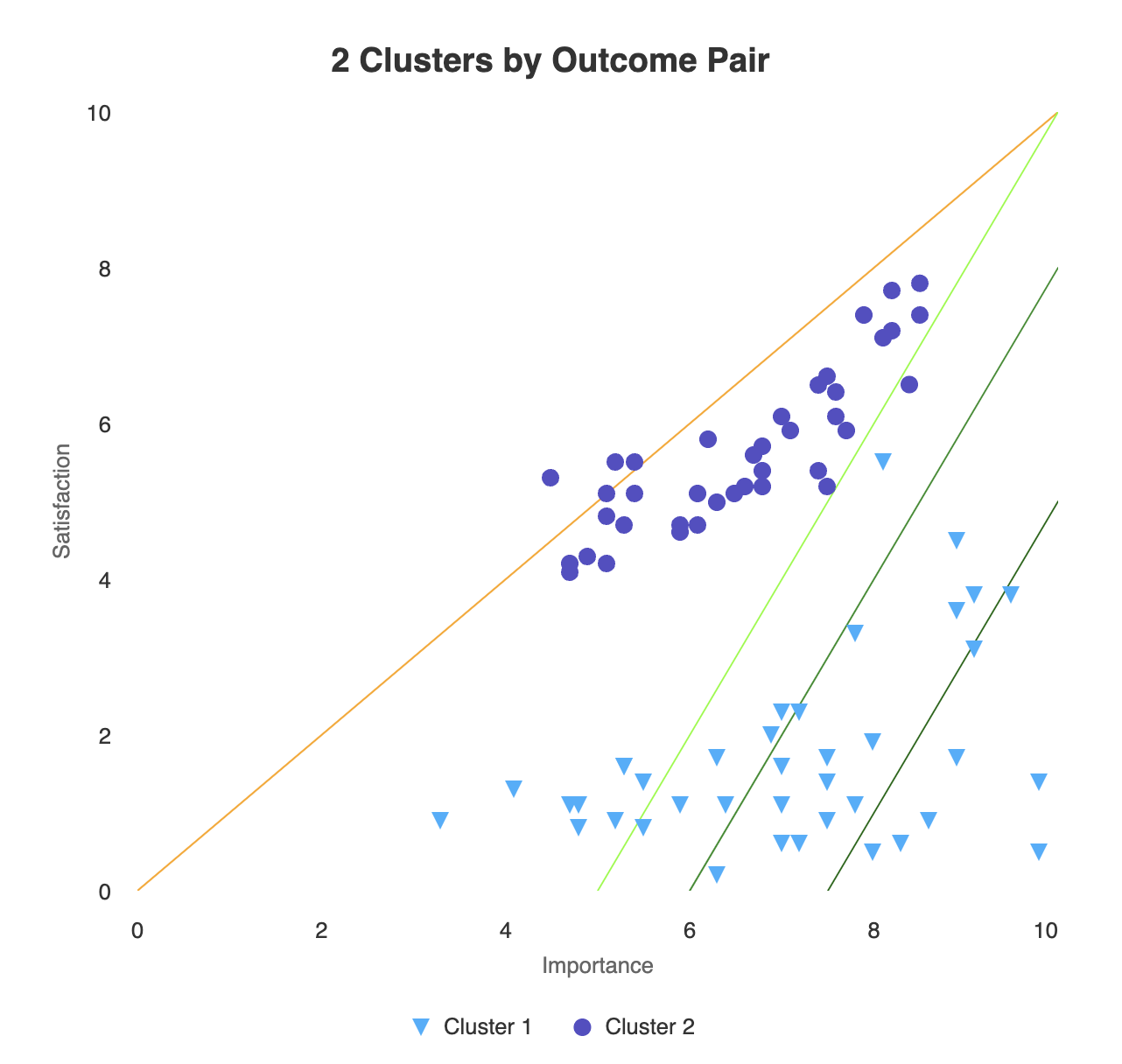

For this particular client, we used Typeform to deploy the survey. I then analyzed the results with an application I developed, which also performs a k-means cluster analysis to further highlight opportunities for product innovation.

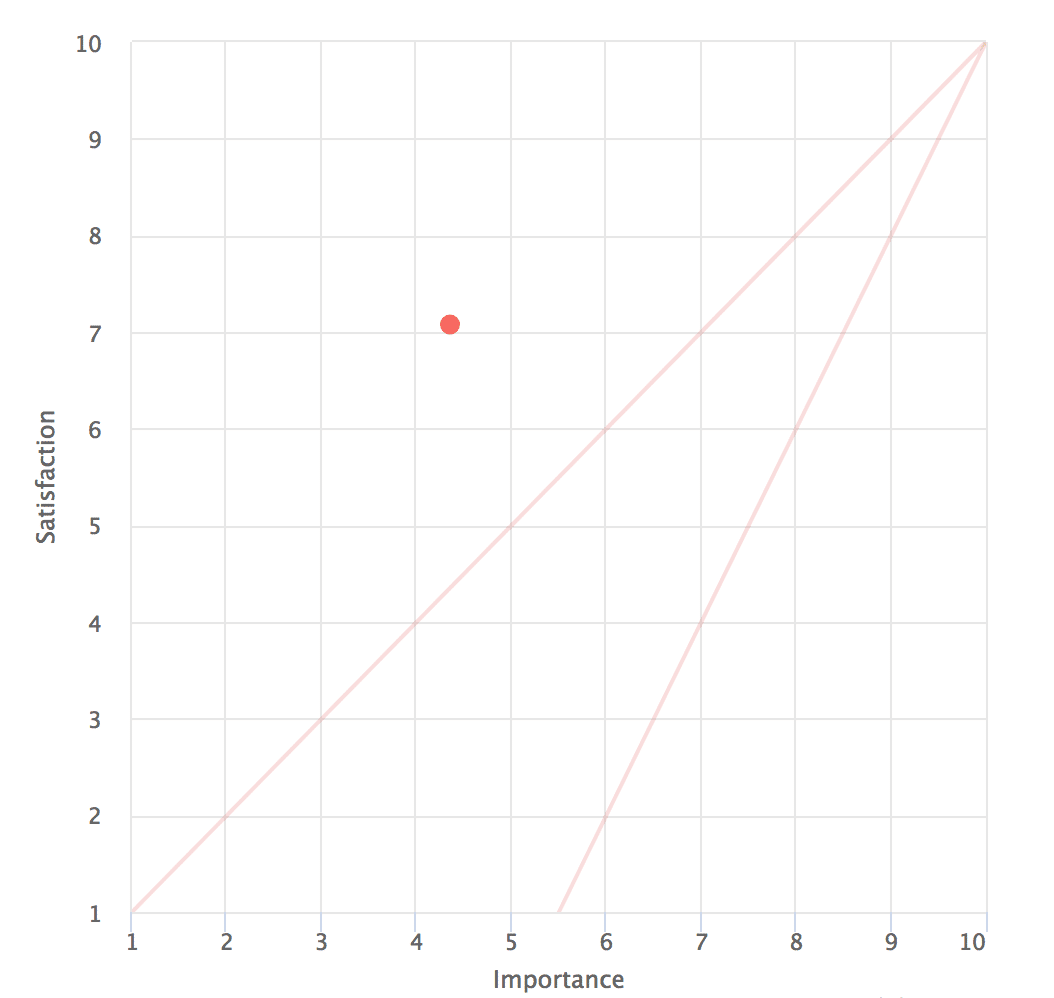

To calculate where each feature sits on the chart above, first determine:

- The percentage of respondents who rated a feature as “Very” or “Extremely” important. Divided by 10, this percentage becomes the importance rating on a 0-10 scale.

- The percentage who rated themselves as “Very” or “Extremely” satisfied with current solutions. Divided by 10, this becomes the satisfaction rating.

For example:

- 15% said a feature was very/extremely important. So the importance rating is 1.5.

- 60% were very/extremely satisfied. The satisfaction rating is 6.0.

By converting percentages to 0-10 scales, you can plot each feature’s importance vs. satisfaction on a quadrant chart. This visualizes where opportunities exist based on user needs.

This process translates survey responses into clear, actionable guidance. The importance-satisfaction analysis shines a light on where your product can best delight customers and where there is opportunity for innovation.
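The post doesn't describe how the analysis application performs its k-means step, so what follows is only a minimal sketch of k-means (Lloyd's algorithm) applied to each outcome's (importance, satisfaction) point. The `kmeans` function, the choice of the first k points as starting centroids, and the iteration cap are assumptions made to keep the example short and reproducible; a real tool would more likely use a library implementation with smarter initialization, such as scikit-learn's `KMeans`.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (importance, satisfaction), both on the 0-10 scale


def kmeans(points: List[Point], k: int, max_iter: int = 100) -> Tuple[List[Point], List[int]]:
    """Cluster 2-D points with Lloyd's algorithm; returns (centroids, labels)."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    # Deterministic initialization: the first k points become the starting centroids.
    centroids = [points[i] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, new_labels) if label == c]
            if members:
                new_centroids.append((
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                ))
            else:
                new_centroids.append(centroids[c])  # an empty cluster keeps its centroid
        # Stop once neither the assignments nor the centroids change.
        if new_labels == labels and new_centroids == centroids:
            break
        labels, centroids = new_labels, new_centroids
    return centroids, labels


points = [(8.5, 2.0), (9.0, 3.0), (8.0, 2.5), (2.0, 8.0), (1.5, 9.0), (2.5, 7.5)]
centroids, labels = kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The sample points are invented for illustration: three high-importance, low-satisfaction outcomes and three of the opposite kind, which the algorithm separates into two clusters.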

Prioritization Just Got Easier

Imagine that prior to conducting this type of survey, you had those 29 ideas on your whiteboard. “What should we build first?” you wonder aloud to yourself or your team.

Now take a look at that chart and ask yourself the same question:

“What should we build first?”

A little bit easier, right?

So how do you get there? Let’s dig in.

The Process

- Interviews

- Surveys

- Analysis

1. Interviews

First, we conducted some customer interviews (I recommend 8-12), each running about 45 minutes. We walked away with a pile of quotes, thoughts, validations, and invalidations that we carried into our analysis of the survey we’d send during the second phase of the research.

The goal during these interviews is to understand the customer’s desired outcomes when they’re using your product. You can then ask an Importance/Satisfaction pair about each outcome and plot them all on the chart we saw above, and your prioritization woes will be greatly diminished.

During your customer interviews, many of the desired outcomes and steps your customers share will be completely expected.

But you’re also very likely to be shocked along the way, and this is one of the most valuable things about doing these interviews.

If you’ve got an existing product, I can almost guarantee that your customers are using it in ways you didn’t expect or design for. When you stumble on these instances, don’t let them slip away! Figure out why they’re “misusing” your product and hacking their way to a better experience. There’s probably something valuable in there!

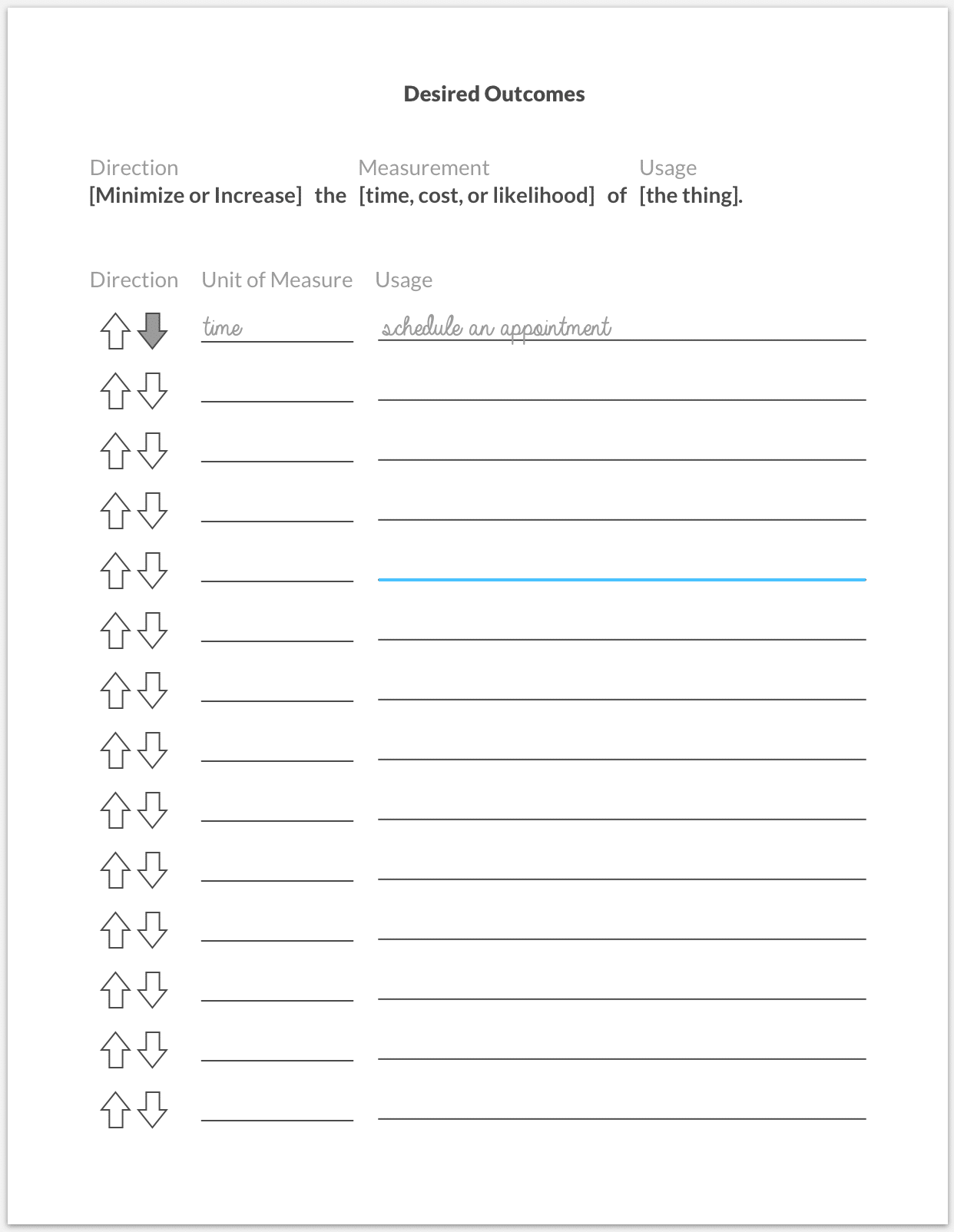

How to Record Your Customers’ Desired Outcomes

When your interviewee details a step in their task, aim to understand their end-goal for that step.

Strategyn calls this the “Desired Outcome Statement” and offers a clear framework for pinpointing this goal:

Your customer wants to:

- Minimize or Increase,

- The Time, Cost, Frequency, or Likelihood,

- Of a specific action.
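If you keep a running list of outcomes during your calls, it can help to capture each one in this three-part structure. The sketch below is one hypothetical way to do that in Python; the `DesiredOutcome` class, its field names, and the convention that `action` carries its own connecting words (“that…”, “it takes to…”) are illustrative assumptions, not Strategyn's notation or tooling.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    MINIMIZE = "minimize"
    INCREASE = "increase"


class Metric(Enum):
    TIME = "the time"
    COST = "the cost"
    FREQUENCY = "the frequency"
    LIKELIHOOD = "the likelihood"


@dataclass(frozen=True)
class DesiredOutcome:
    """Direction + metric + specific action, e.g. 'increase the likelihood that ...'."""

    direction: Direction
    metric: Metric
    action: str  # includes its connecting words, e.g. "it takes to crop a photo"

    def phrase(self) -> str:
        """Lowercase form, handy for dropping into survey questions."""
        return f"{self.direction.value} {self.metric.value} {self.action}"

    def statement(self) -> str:
        """Sentence-case form for your interview notes."""
        text = self.phrase()
        return text[0].upper() + text[1:]


composition = DesiredOutcome(
    Direction.INCREASE, Metric.LIKELIHOOD, "that your composition is visually appealing"
)
print(composition.statement())  # Increase the likelihood that your composition is visually appealing
```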

For instance, if a customer using your photo app mentions “cropping” as their next step after snapping a photo, don’t merely ask:

“Is cropping vital for you?”

Instead, delve deeper into their motive behind cropping. This uncovers areas to refine their experience and improve your product.

Ask, “What’s your reason for cropping photos?” A typical answer might be to improve the image’s layout. Reframe this insight:

“So, cropping the image increases the likelihood of a more aesthetically pleasing photo composition?”

From there, formulate focused survey questions:

- “How important is it to increase the likelihood that your composition is visually appealing?”

- “How satisfied are you with your ability to increase the likelihood that your composition is visually appealing?”

If the responses come back as very important and very unsatisfied, now you’ve got a chance to brainstorm some interesting options with far more impact than, “Do we need a crop tool?”
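Because both survey questions follow the same wording pattern, you can generate them from each outcome phrase instead of writing every pair by hand. A minimal sketch, assuming your outcome phrases are stored in lowercase form like the composition example above; `question_pair` is a hypothetical helper name.

```python
from typing import Tuple


def question_pair(outcome_phrase: str) -> Tuple[str, str]:
    """Turn a lowercase outcome phrase into paired importance/satisfaction questions."""
    phrase = outcome_phrase.strip().rstrip(".")
    importance = f"How important is it to {phrase}?"
    satisfaction = f"How satisfied are you with your ability to {phrase}?"
    return importance, satisfaction


imp, sat = question_pair("increase the likelihood that your composition is visually appealing")
print(imp)  # How important is it to increase the likelihood that your composition is visually appealing?
print(sat)  # How satisfied are you with your ability to increase the likelihood that your composition is visually appealing?
```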

Here’s a handy little PDF you can download that I like to have with me on these calls. It makes it a lot easier to keep a record of all the outcomes you’ll collect on your calls.

2. Surveys

You’re going to come out of your customer interview process with a ton of quotes, stories, and ideas. Equally important, you’ll have built up a list of outcome statements that will make up your survey.

Build Your Survey

Typeform is far and away my favorite survey tool on the market. The UX for participants is unparalleled, and its API for retrieving survey responses is stellar.

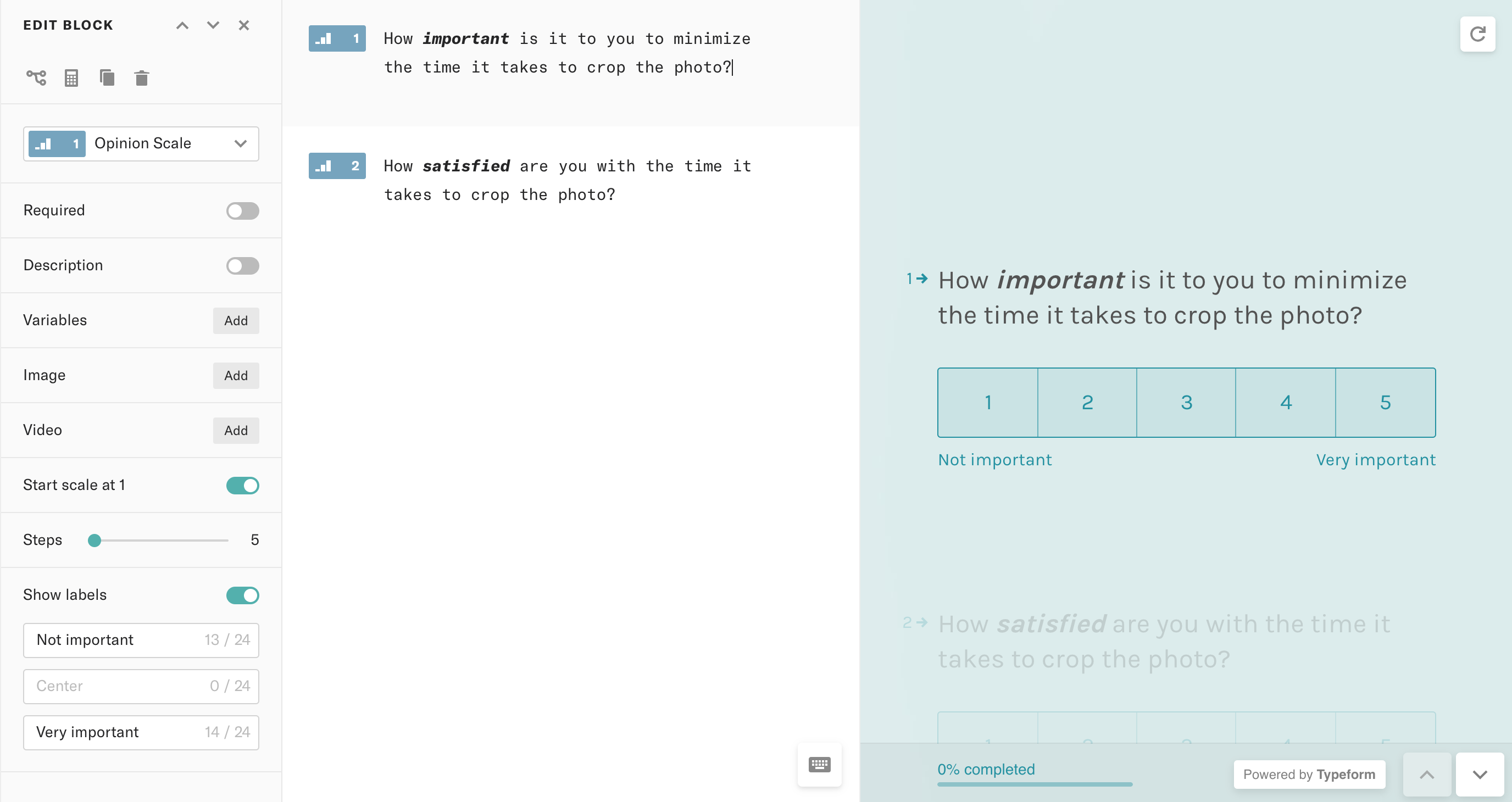

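Create each pair of Importance/Satisfaction questions using the settings below (the labels shown are for the importance question; for the satisfaction question, use satisfaction wording such as “Not satisfied” and “Very satisfied”):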

- Question type: Opinion Scale

- Start scale at 1: true

- Steps: 5

- Show labels: true

- Left label: Not important

- Right label: Very important

Devs and Technical Founders: You can save a ton of time by using Typeform’s API instead of creating every single question by hand. Create one Importance/Satisfaction pair like the one above, retrieve the form, duplicate and edit the questions in your favorite code editor, then update the form with the new questions.
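Here is a minimal sketch of that workflow using only Python's standard library. It assumes Typeform's Create API endpoints for retrieving a form (`GET https://api.typeform.com/forms/{form_id}`) and replacing it (`PUT` to the same URL), authenticated with a personal access token, and it assumes the first two fields of your form are the hand-built pair. The helper names and the `importance-1`-style refs are hypothetical, and because a PUT replaces the entire form definition, check Typeform's current documentation on how it treats field `id`s and `ref`s before running this against a live form.

```python
import copy
import json
import urllib.request
from typing import List, Tuple

API_BASE = "https://api.typeform.com/forms"  # Typeform Create API forms endpoint


def fetch_form(form_id: str, token: str) -> dict:
    """Retrieve a form definition (GET /forms/{form_id})."""
    req = urllib.request.Request(
        f"{API_BASE}/{form_id}",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def update_form(form_id: str, token: str, form: dict) -> int:
    """Replace the whole form definition (PUT /forms/{form_id}); returns the HTTP status."""
    req = urllib.request.Request(
        f"{API_BASE}/{form_id}",
        data=json.dumps(form).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(req) as resp:
        return resp.status


def build_question_fields(
    template_pair: Tuple[dict, dict], titles: List[Tuple[str, str]]
) -> List[dict]:
    """Clone the hand-built importance/satisfaction pair once per pair of titles."""
    importance_tpl, satisfaction_tpl = template_pair
    fields = []
    for n, (importance_title, satisfaction_title) in enumerate(titles, start=1):
        for template, title, kind in (
            (importance_tpl, importance_title, "importance"),
            (satisfaction_tpl, satisfaction_title, "satisfaction"),
        ):
            field = copy.deepcopy(template)  # keeps type, steps, labels, etc.
            field.pop("id", None)  # assumption: fields sent without an id are created as new
            field["ref"] = f"{kind}-{n}"  # hypothetical ref scheme; keep refs unique
            field["title"] = title
            fields.append(field)
    return fields


# Usage (not executed here; needs a real form ID and personal access token):
# form = fetch_form("YOUR_FORM_ID", "YOUR_TOKEN")
# pair = (form["fields"][0], form["fields"][1])
# form["fields"] = build_question_fields(pair, [(imp_q1, sat_q1), (imp_q2, sat_q2)])
# update_form("YOUR_FORM_ID", "YOUR_TOKEN", form)
```

Copying the template fields wholesale means the opinion-scale settings you configured by hand (steps, start at 1, labels) carry over to every generated question without hard-coding Typeform's property names.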

Ship that Puppy!

Have a couple of friendlies fill out the survey after you’ve loaded all your questions. You want to be sure that the results are being saved, and having some extra sets of eyes to proofread your work never hurts.

Once you’ve confirmed that it’s good to go, get it in front of your customers or prospects and start collecting results.

For my client’s project, we sent the survey to everyone we’d interviewed as well as several dozen additional customers to ensure we had a reliable set of results.

3. Analysis

After you’ve begun collecting responses to your survey, you’re ready to begin analyzing the data.

Regardless of the tool you use to do this piece of the work (Excel, web app, R), you determine the X and Y coordinates of each outcome statement using this calculation:

- X-axis: (fraction of respondents who rated this outcome a 4 or 5 in Importance) × 10

- Y-axis: (fraction of respondents who rated this outcome a 4 or 5 in Satisfaction) × 10

For example:

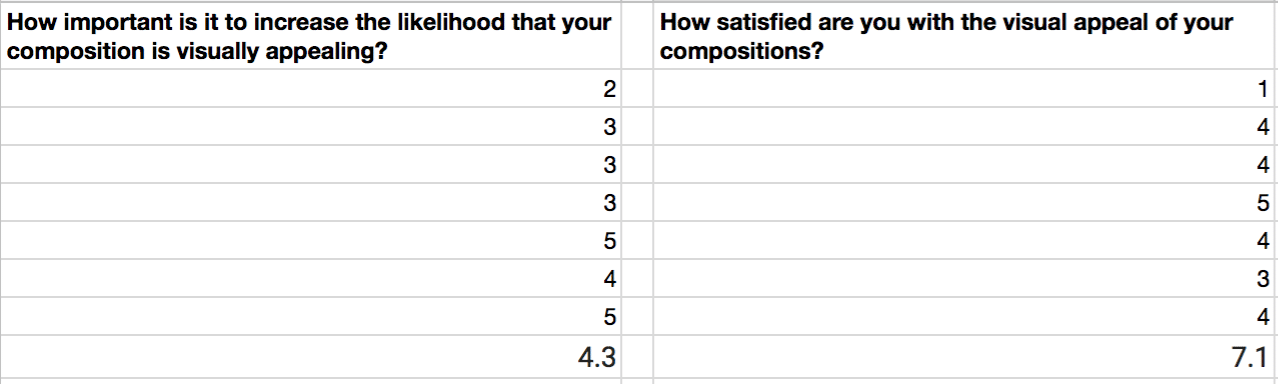

In the image above, we’ve got seven imaginary responses to the question about making the compositions more visually appealing in our “Share beautiful pictures” app.

Three of the seven people rated its importance a 4 or 5, which is 3/7, or roughly 0.43. Multiplying by 10 gives a coordinate of 4.3 on the chart’s 0-10 scale.

Perform the same calculation for satisfaction (5 of the 7 respondents rated it a 4 or 5, so 5/7 ≈ 0.71) and we arrive at 7.1.

This means we’d plot this particular outcome statement at roughly (4.3, 7.1) on the chart.

Repeat this calculation for all of your outcome statements and you’ll be much more equipped to start making some decisions around roadmap prioritization.
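The calculation above can be sketched in a few lines of Python, assuming each outcome's responses are available as lists of 1-5 integers (for example, after exporting them from Typeform). The function names are hypothetical, and the sample ratings are invented solely to reproduce the 3-of-7 and 5-of-7 counts in the example; they are not the responses from the image.

```python
from typing import Iterable, Tuple


def top_two_box_score(ratings: Iterable[int]) -> float:
    """Fraction of 1-5 ratings that are a 4 or 5, scaled to 0-10."""
    ratings = list(ratings)
    if not ratings:
        raise ValueError("need at least one rating")
    top = sum(1 for r in ratings if r >= 4)  # assumes a 1-5 scale
    return top / len(ratings) * 10


def outcome_coordinates(importance: Iterable[int], satisfaction: Iterable[int]) -> Tuple[float, float]:
    """(x, y) chart position for one outcome, rounded to one decimal place."""
    return round(top_two_box_score(importance), 1), round(top_two_box_score(satisfaction), 1)


# Hypothetical ratings matching the counts above (3 of 7 and 5 of 7 rated 4 or 5).
importance = [5, 4, 3, 2, 4, 1, 3]
satisfaction = [4, 5, 5, 2, 4, 3, 5]
print(outcome_coordinates(importance, satisfaction))  # (4.3, 7.1)
```

Calling `outcome_coordinates` once per outcome statement gives you every point you need for the chart.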

Conclusion

As you can imagine, with all of the feedback we’d collected from the customer interviews combined with the analysis from the surveys, my client was able to make much more informed decisions about where to spend their time, money, and energy.

All of the Above: Done for You!

Hopefully, you’re empowered to deploy an actionable JTBD survey to back up all your qualitative interviews!

But if you’re in a position to work with a seasoned pro who has done this a hundred times for companies all over the world, Contact Me and let’s get this done.